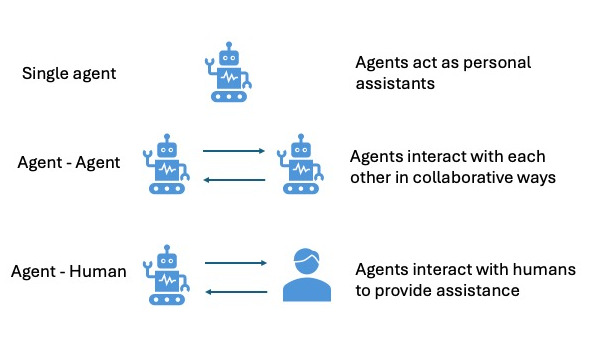

If there is one area in which news is produced daily, it is artificial intelligence. New models, new techniques, (new memes), new tools… appear day after day, making the ecosystem more and more capable. Among all these advances, two protocols are shaping the future of AI agents (which we discussed recently) and their integration with machine learning (ML) systems: the Model Context Protocol (MCP) and the Agent-to-Agent (A2A) protocol. They address distinct but complementary needs in building interoperable, scalable, and context-aware AI ecosystems.

Model Context Protocol (MCP)

The Model Context Protocol (MCP), developed by Anthropic and open-sourced in November 2024, is an open protocol that standardizes how AI models, particularly large language models (LLMs), connect to external tools, data sources, and APIs.

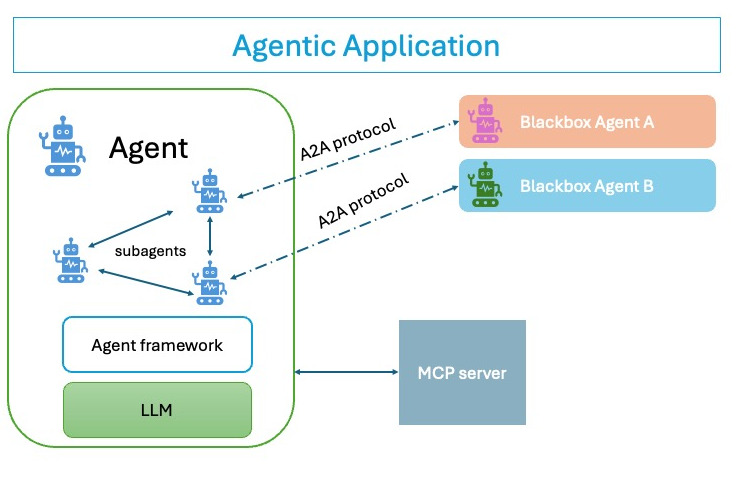

Think of MCP as a universal adapter, like a USB-C port for AI, that streamlines how models access structured context (e.g., databases, files, or business systems) to perform tasks beyond their training data. It follows a client-server architecture: an MCP host (the AI application) runs MCP clients, each of which connects to an MCP server that exposes specific capabilities. This lets an agent act on real-world data without hardcoded, one-off integrations.

MCP focuses on vertical integration, ensuring seamless interaction between an ML model and its external environment.
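Under the hood, MCP messages are JSON-RPC 2.0 objects. The minimal sketch below builds the two requests a client typically sends to discover and invoke a server's tools, `tools/list` and `tools/call`. It skips the transport and the initial `initialize` handshake that a real MCP SDK handles for you, and the tool name `query_customers` and its arguments are hypothetical.

```python
import json
from itertools import count
from typing import Optional

# JSON-RPC 2.0 matches each response to its request through the "id" field.
_next_id = count(1)


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    request = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Discover the tools a server exposes.
list_tools = make_request("tools/list")

# Invoke one of them by name; the tool and its arguments are hypothetical.
call_tool = make_request(
    "tools/call",
    {"name": "query_customers", "arguments": {"customer_id": "C-42"}},
)

print(json.dumps(list_tools))
# {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(json.dumps(call_tool, indent=2))
```

Keeping the message builder separate from any transport reflects how MCP itself separates the message format from the transport that carries it.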

Key Features of MCP:

- Tool Integration: Dynamically injects tools, documents, or APIs into an LLM’s context window.

- Standardized Access: Describes tools with JSON Schema and exchanges messages as JSON-RPC 2.0, giving consistent interactions and reducing the need for custom integrations.

- Scalability: Supports multiple servers for diverse data sources (e.g., Google Drive, Postgres).

- Flexibility: Lets agents fetch context or execute actions without predefined prompts, through secure, two-way connections to external systems that support actions like querying APIs or updating records.
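To make these features concrete, here is a toy, in-process stand-in for an MCP server, written with the standard library only rather than an official SDK. Each tool is registered with a name, a description, and a JSON Schema `inputSchema` (the shape MCP uses to describe tools), and a dispatcher answers `tools/list` and `tools/call` requests. The `get_stock_level` tool and its inventory data are hypothetical, and unlike a production server this sketch does not validate arguments against the schema.

```python
import json
from typing import Callable

# Hypothetical tool registry: tool name -> (MCP-style definition, Python handler).
TOOLS: dict[str, tuple[dict, Callable[..., str]]] = {}


def tool(name: str, description: str, properties: dict, required: list):
    """Register a handler as a tool whose arguments are described by JSON Schema."""
    def decorator(fn: Callable[..., str]) -> Callable[..., str]:
        definition = {
            "name": name,
            "description": description,
            "inputSchema": {"type": "object", "properties": properties, "required": required},
        }
        TOOLS[name] = (definition, fn)
        return fn
    return decorator


@tool("get_stock_level", "Return the units in stock for a SKU.",
      {"sku": {"type": "string"}}, ["sku"])
def get_stock_level(sku: str) -> str:
    fake_inventory = {"SKU-1": 120, "SKU-2": 0}  # stand-in for a real warehouse database
    return str(fake_inventory.get(sku, 0))


def error(request_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for tools/list or tools/call."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [definition for definition, _ in TOOLS.values()]}
    elif method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return error(request["id"], -32602, f"Unknown tool: {params.get('name')}")
        _, handler = entry
        text = handler(**params.get("arguments", {}))  # no schema validation in this toy
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error(request["id"], -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
print(json.dumps(listing, indent=2))

reply = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                "params": {"name": "get_stock_level", "arguments": {"sku": "SKU-1"}}})
print(reply["result"]["content"][0]["text"])  # 120
```

Because the model only ever sees the definitions returned by `tools/list`, adding a tool is a matter of registering another handler, with no change on the client side.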

Agent-to-Agent (A2A) Protocol

The A2A protocol, introduced by Google in April 2025, is an open standard designed to enable seamless communication and collaboration between AI agents regardless of their underlying frameworks or vendors.

A2A facilitates agent-to-agent interactions by standardizing task delegation, message exchange, and capability discovery. Built on web standards such as HTTP, JSON-RPC 2.0, and Server-Sent Events (SSE), it is designed for secure, scalable, multi-modal communication (text, JSON, images, etc.). Agents can coordinate complex workflows, share task states, and negotiate data formats, much like a human team.

A2A focuses on horizontal integration, enabling direct communication and collaboration between AI agents.
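A2A requests are also JSON-RPC 2.0 messages. The sketch below builds a task request whose message combines a text part and a structured data part, which is how A2A carries multi-modal content. The method name `tasks/send` and the `parts`/`type` field names follow the early (April 2025) draft of the spec; later versions renamed some of them, so treat these names as assumptions and check the version you target. The instruction text and payload are hypothetical.

```python
import json
import uuid


def make_task_request(instruction: str, payload: dict) -> dict:
    """Build an A2A-style 'tasks/send' request (field names from the early draft spec)."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),            # JSON-RPC request id
        "method": "tasks/send",
        "params": {
            "id": str(uuid.uuid4()),        # task id, chosen by the client
            "message": {
                "role": "user",
                "parts": [
                    {"type": "text", "text": instruction},   # human-readable instruction
                    {"type": "data", "data": payload},       # structured JSON payload
                ],
            },
        },
    }


request = make_task_request(
    "Generate the Q2 sales report",
    {"region": "EMEA", "format": "pdf"},
)
print(json.dumps(request, indent=2))
```

The receiving agent only needs to understand the message parts, not how the sender produced them, which is what keeps agents from different vendors decoupled.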

Key Features of A2A:

- Capability Discovery: Agents expose “Agent Cards” (JSON-based) to advertise their skills, enabling dynamic discovery.

- Task Management: Supports short- and long-running tasks with real-time status updates.

- Collaboration: Enables agents to exchange structured multi-part messages and artifacts (e.g., reports, visuals).

- Security: Incorporates enterprise-grade authentication aligned with OpenAPI standards.
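Capability discovery can be sketched as a client filtering the Agent Cards it has fetched. The two cards below are hypothetical but use fields found in A2A Agent Cards (`name`, `url`, `version`, `capabilities`, `skills`); the matching rule, which picks agents by skill tag and can require streaming support, is an illustrative assumption rather than part of the protocol.

```python
# Hypothetical Agent Cards, shaped like the JSON documents A2A agents publish.
CARDS = [
    {
        "name": "Report Writer",
        "url": "https://reports.example.com/a2a",
        "version": "1.0.0",
        "capabilities": {"streaming": True},
        "skills": [{"id": "quarterly-report", "name": "Quarterly report",
                    "description": "Drafts a quarterly sales report.",
                    "tags": ["reporting", "sales"]}],
    },
    {
        "name": "Chart Maker",
        "url": "https://charts.example.com/a2a",
        "version": "0.3.0",
        "capabilities": {"streaming": False},
        "skills": [{"id": "bar-chart", "name": "Bar chart",
                    "description": "Renders a bar chart from tabular data.",
                    "tags": ["visualization"]}],
    },
]


def find_agents(cards: list, tag: str, need_streaming: bool = False) -> list:
    """Return the endpoint URLs of agents advertising a skill with the given tag."""
    matches = []
    for card in cards:
        if need_streaming and not card.get("capabilities", {}).get("streaming", False):
            continue  # skip agents that cannot stream status updates
        if any(tag in skill.get("tags", []) for skill in card.get("skills", [])):
            matches.append(card["url"])
    return matches


print(find_agents(CARDS, "reporting", need_streaming=True))
# ['https://reports.example.com/a2a']
```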

Key Differences Between MCP and A2A

While both protocols empower AI agents, they serve distinct purposes within the agentic ecosystem: MCP handles vertical integration and A2A horizontal integration. Below is a detailed comparison across key dimensions:

| Aspect | MCP | A2A |
|---|---|---|
| Primary Focus | Agent-to-tool/data integration: connects ML models to external tools and data (vertical integration). | Agent-to-agent communication and collaboration (horizontal integration). |
| Use Case | Connecting an agent to external systems for data access or actions; provides context and tools to a single model or agent. | Coordinating tasks and data exchange between multiple agents across platforms/vendors. |
| Communication Layer | Vertical (agent-to-tool/API). | Horizontal (agent-to-agent). |
| Standards | JSON-RPC 2.0 messages, client-server model; tool inputs described with JSON Schema. | HTTP, JSON-RPC 2.0, SSE for real-time updates. |
| Data Exchange | Structured context (APIs, documents, functions). | Multi-modal (text, JSON, images, video) with format negotiation. |
| Security | OAuth 2.1-based authorization for HTTP transports (added in a 2025 spec revision). | Enterprise-grade authentication, sandboxing to prevent attacks. |
| Complexity | Requires explicit tool definitions; less flexible for collaboration. | Handles dynamic, unstructured interactions. |
| Scalability | Scales with tool integrations; limited to predefined contexts. | Scales with agent ecosystems; supports cross-vendor interoperability. |
| Architecture | Acts as an integration layer for model inputs/outputs. | Defines a communication protocol for agent interactions. |
| Example Task | Querying a CRM database to fetch customer data for an ML model. | An agent delegating a report-generation task to another agent. |
| Developed By | Anthropic | Google, with 50+ industry partners |
| Transport | stdio and HTTP (HTTP+SSE in the original spec, Streamable HTTP in later revisions); custom transports are allowed. | JSON-RPC 2.0 over HTTP(S), with SSE for streaming. |

In essence, MCP equips a single ML model or agent with the tools and data it needs to act, while A2A enables multiple agents to collaborate as a team, sharing tasks and results across a network.

When to Use MCP, A2A or Both

MCP

MCP is ideal in scenarios where an ML model needs to interact with external systems, tools, APIs, or data sources to perform actions or retrieve context to complete a task. It eliminates the complexity of custom integrations by providing a standardized way to inject context and trigger actions.

It’s ideal for:

- Tool Integration: When an agent needs direct access to platforms like GitHub, Slack, or databases.

- Context-Aware Actions: When an agent requires runtime context to make informed decisions.

- Standardized Integrations: When simplifying connections to enterprise systems is a priority.

Example: Supply Chain Optimization

A manufacturing company uses an ML model to predict inventory needs. The model, integrated via MCP, connects to:

- A supply chain API to fetch real-time supplier data.

- A warehouse database to check stock levels.

- An ERP system to update purchase orders.

The MCP server handles secure data exchange, ensuring the model can reason over current data and execute actions like placing orders. This setup allows the model to operate autonomously within the company’s ecosystem, improving efficiency without requiring agent-to-agent coordination.
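The supply chain flow can be sketched as one function that calls three tools. In a real deployment each call would go through an MCP client to the corresponding server; here they are hypothetical local stubs with made-up data. The `predicted_daily_demand` argument stands in for the ML model's forecast, and the reorder rule (expected demand over the supplier lead time plus a safety stock) is a simple, common inventory rule chosen for illustration, not something the example above prescribes.

```python
# Hypothetical stand-ins for tools on three MCP servers (supplier API,
# warehouse database, ERP); in practice each call would go through an MCP client.
SUPPLIER_LEAD_TIME_DAYS = {"SKU-1": 5, "SKU-2": 12}
WAREHOUSE_STOCK = {"SKU-1": 40, "SKU-2": 300}
purchase_orders: list = []


def get_supplier_lead_time(sku: str) -> int:                # supply chain API
    return SUPPLIER_LEAD_TIME_DAYS[sku]


def get_stock_level(sku: str) -> int:                       # warehouse database
    return WAREHOUSE_STOCK[sku]


def create_purchase_order(sku: str, quantity: int) -> None:  # ERP system
    purchase_orders.append((sku, quantity))


def replenish(sku: str, predicted_daily_demand: float, safety_stock: int) -> int:
    """If stock is below lead-time demand plus safety stock, order the shortfall."""
    reorder_point = round(predicted_daily_demand * get_supplier_lead_time(sku)) + safety_stock
    on_hand = get_stock_level(sku)
    if on_hand >= reorder_point:
        return 0  # enough stock, no action needed
    quantity = reorder_point - on_hand
    create_purchase_order(sku, quantity)
    return quantity


print(replenish("SKU-1", predicted_daily_demand=20, safety_stock=30))  # 90
print(replenish("SKU-2", predicted_daily_demand=10, safety_stock=30))  # 0
print(purchase_orders)  # [('SKU-1', 90)]
```

The decision logic only depends on the tool results, so swapping a stub for a real MCP-backed call leaves `replenish` unchanged.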

A2A

A2A is ideal for scenarios where multiple AI agents need to collaborate on multi-step tasks across different platforms, vendors, or organizations. It enables agents to discover each other, negotiate tasks, and share results without relying on a centralized controller.

It’s ideal for:

- Multi-Agent Workflows: When specialized agents need to coordinate, delegate, or share results.

- Cross-Vendor Ecosystems: When agents from different providers (e.g., Google, Salesforce) must interoperate.

- Dynamic Interactions: When tasks involve ongoing communication, format negotiation, or long-running processes.

Example: Cross-Organizational Customer Support

A telecom company partners with a third-party repair service. Each runs its own AI agent:

- The telecom’s agent handles customer complaints and diagnostics.

- The repair service’s agent schedules technician visits.

Using A2A, the telecom’s agent sends a task (e.g., “schedule a repair for customer X”) to the repair agent, including diagnostic data as a JSON artifact. The repair agent responds with a confirmation and a scheduled time, streamed via SSE. This interaction happens without either agent needing to understand the other’s internal systems.
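On the telecom side, following the repair agent's streamed reply comes down to reading SSE events and reacting to status and artifact updates. The minimal sketch below parses an SSE stream held in a string, where each event is a `data:` line carrying a JSON-RPC response, ended by a blank line. The event shapes (`status.state`, `artifact.parts`, `final`) follow the early A2A draft and may differ in newer versions; the task id, slot, and technician values are hypothetical, and the parser assumes `\n` line endings.

```python
import json
from typing import Iterator

# Hypothetical events streamed back by the repair agent for task "task-7".
EVENTS = [
    {"id": "task-7", "status": {"state": "working"}, "final": False},
    {"id": "task-7", "artifact": {"parts": [
        {"type": "data", "data": {"slot": "Tue 09:00", "technician": "T-12"}}]}},
    {"id": "task-7", "status": {"state": "completed"}, "final": True},
]
# Each SSE event is a "data:" line carrying a JSON-RPC response, ended by a blank line.
RAW_STREAM = "".join(
    "data: " + json.dumps({"jsonrpc": "2.0", "id": 1, "result": event}) + "\n\n"
    for event in EVENTS
)


def parse_sse(raw: str) -> Iterator[dict]:
    """Yield the JSON payload of each SSE event (assumes '\\n' line endings)."""
    for block in raw.split("\n\n"):
        data_lines = []
        for line in block.split("\n"):
            if line.startswith("data:"):
                value = line[len("data:"):]
                # SSE strips a single leading space after the colon.
                data_lines.append(value[1:] if value.startswith(" ") else value)
        if data_lines:
            yield json.loads("\n".join(data_lines))


def follow_task(raw: str) -> tuple:
    """Track the task state and collect the scheduling artifact until the final event."""
    state, booking = "unknown", {}
    for response in parse_sse(raw):
        event = response["result"]
        for part in event.get("artifact", {}).get("parts", []):
            if part["type"] == "data":
                booking = part["data"]
        if "status" in event:
            state = event["status"]["state"]
        if event.get("final"):
            break
    return state, booking


print(follow_task(RAW_STREAM))
# ('completed', {'slot': 'Tue 09:00', 'technician': 'T-12'})
```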

Use MCP and A2A Together

When combined, MCP and A2A create a powerful framework for building composable, multi-agent ML systems: MCP provides individual agents with the tools and context they need, while A2A enables those agents to collaborate seamlessly.

Using both is ideal for:

- Complex Enterprise Workflows: When tasks require both agent collaboration and tool interactions.

- Scalable Automation: When building modular systems that integrate with existing IT stacks.

- Interoperable Ecosystems: When leveraging agents and tools from multiple vendors.

Example: Financial Fraud Detection

A bank deploys a multi-agent system to detect and respond to fraudulent transactions:

- Agent 1: Fraud Detection Agent (uses MCP and A2A)
  - Connected via MCP to:
    - A transaction database for real-time data.
    - An ML model API for scoring transaction risk.
    - A notification system to alert compliance teams.
  - The agent pulls transaction data, scores it, flags suspicious activity, and passes flagged transactions to Agent 2 via A2A.

- Agent 2: Investigation Agent (uses MCP and A2A)
  - Uses MCP to access customer profiles and historical data.
  - Receives flagged transactions from Agent 1 via A2A, including a JSON artifact with risk scores.
  - Delegates follow-up tasks (e.g., “freeze account”) to a third agent via A2A.

- Agent 3: Action Agent (uses MCP and A2A)
  - Receives follow-up tasks from Agent 2 via A2A.
  - Connected via MCP to the bank’s account management system.
  - Executes actions like freezing accounts or issuing refunds.

How They Work Together:

- MCP enables each agent to interact with its respective tools and data sources (e.g., databases, APIs).

- A2A facilitates communication between agents, allowing them to share tasks and results (e.g., passing a flagged transaction from Agent 1 to Agent 2).

The result is a cohesive workflow where agents operate independently but coordinate effectively.
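The whole fraud workflow can be simulated in-process to show where each protocol sits. Plain functions stand in for the MCP tools (transaction database, risk-scoring API, notification system, customer profiles, account management), and plain dicts shaped like A2A messages stand in for the tasks passed between agents. All names, thresholds, and data are hypothetical, and the risk score is a toy rule rather than a real model.

```python
from typing import Optional

ALERTS: list = []       # notification system (hypothetical)
FROZEN: set = set()     # account management system (hypothetical)


# --- MCP tool stand-ins ---------------------------------------------------
def fetch_transaction(tx_id: str) -> dict:            # transaction database
    return {"tx_id": tx_id, "account": "ACC-9", "amount": 9_800.0}


def score_risk(tx: dict) -> float:                     # toy rule, not a real model
    return min(tx["amount"] / 10_000, 1.0)


def notify_compliance(tx_id: str) -> None:             # notification system
    ALERTS.append(tx_id)


def customer_profile(account: str) -> dict:            # customer profiles / history
    return {"account": account, "usual_max_amount": 500.0}


def freeze_account(account: str) -> None:              # account management system
    FROZEN.add(account)


# --- A2A-style task helpers -----------------------------------------------
def make_task(instruction: str, data: dict) -> dict:
    return {"message": {"role": "user", "parts": [
        {"type": "text", "text": instruction}, {"type": "data", "data": data}]}}


def data_of(task: dict) -> dict:
    return next(p["data"] for p in task["message"]["parts"] if p["type"] == "data")


# --- Agents ---------------------------------------------------------------
def fraud_detection_agent(tx_id: str, threshold: float = 0.8) -> Optional[dict]:
    tx = fetch_transaction(tx_id)
    risk = score_risk(tx)
    if risk < threshold:
        return None
    notify_compliance(tx_id)
    return make_task("Investigate flagged transaction", {**tx, "risk": risk})


def investigation_agent(task: dict) -> Optional[dict]:
    tx = data_of(task)
    profile = customer_profile(tx["account"])
    if tx["amount"] <= 10 * profile["usual_max_amount"]:
        return None  # unusual but not extreme: no follow-up action
    return make_task("freeze account", {"account": tx["account"]})


def action_agent(task: dict) -> dict:
    account = data_of(task)["account"]
    freeze_account(account)
    return {"state": "completed", "account": account}


flagged = fraud_detection_agent("TX-1")
follow_up = investigation_agent(flagged) if flagged else None
result = action_agent(follow_up) if follow_up else None
print(result)  # {'state': 'completed', 'account': 'ACC-9'}
```

Each agent touches only its own tools and the task dicts it receives, which mirrors the split described above: MCP inside each agent, A2A between them.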

As we saw, MCP and A2A are complementary protocols that address distinct but interconnected challenges in ML-driven enterprises. MCP empowers individual models with the context and tools they need to act, while A2A enables agents to collaborate across systems and organizations. Used separately, they solve specific integration or coordination problems; used together, they can unlock sophisticated, scalable AI workflows.

1 thought on “MCP and A2A, a perfect tandem”