Lately my colleagues and I have been discussing how to streamline several parallel development efforts whose time constraints are dictated by the roadmaps of different solutions. Above all, we want to improve the developer experience so that developers can be more productive and, at the same time, enjoy doing what they do best: programming to deliver value to external or internal customers.

The fact is, as you might expect, it hasn’t always been that way. I got nostalgic remembering when things were “different”, so let’s take a quick trip back in time.

Over time, the software development industry has undergone drastic changes, and the developer experience and the cognitive load associated with it have evolved along with them.

From the early days of programming to the current era of digital transformation and the cloud, developers have had to learn new tools, languages and methodologies, adapting (or reinventing themselves in some cases) to keep up with market demands.

Prehistory: The Mainframe Era and Low-Level Programming

Some younger readers may not be familiar with the early days of computing, when developers worked mostly with mainframes, green phosphor screens (I remember a VAX that doubled as heating at the university during the cold winters of Valladolid) and low-level programming languages such as assembly language. For those who do not know assembler, it is a language in which you work directly with memory and CPU registers and request system services by triggering interrupts. Sounds good, doesn’t it? A “Hello world” program, here for 32-bit Linux, might look something like this:

section .data

msg db 'Hello, world!', 0xa

section .text

global _start

_start:

; write the message to the console

mov eax, 4 ; Syscall num 4: write

mov ebx, 1 ; file descriptor: stdout

mov ecx, msg ; msg address

mov edx, 14 ; msg length: 13 characters plus the newline

int 0x80 ; call interruption

; exit the program

mov eax, 1 ; Syscall num 1: exit

xor ebx, ebx ; exit code: 0

int 0x80 ; call interruption

Assembler is a rather cryptic language, but at that time the developer’s expertise was focused on efficiency and on optimizing code to make the most of limited hardware resources, and assembler delivers on that: it produces very compact and efficient programs. The few debugging tools available were rudimentary and, as you might expect, the development cycle was slow because of the complexity of the language, the manual lookups of system services in references like Ralf Brown’s “Interrupt List”, and the limited computing and storage capacity.

The Ancient Age: The Era of High-Level Languages

As computing power increased, it became possible to work with higher levels of abstraction and to run more complex compilation and linking steps. This is when programming languages such as C, Fortran or Pascal began to emerge. Their libraries spared programmers from writing the same routines over and over and shielded them from the implementation details. As a result, much more complex projects began to be undertaken.

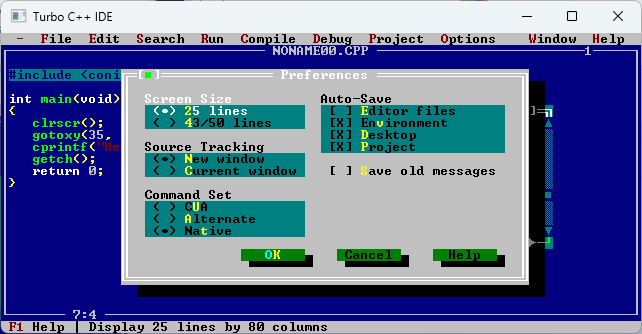

It was around this time that IDEs (Integrated Development Environments) also appeared, making it possible to program, debug and read documentation from the same application. The first ones were text-based, like Borland’s.

The Middle Ages: The Era of Desktop Applications

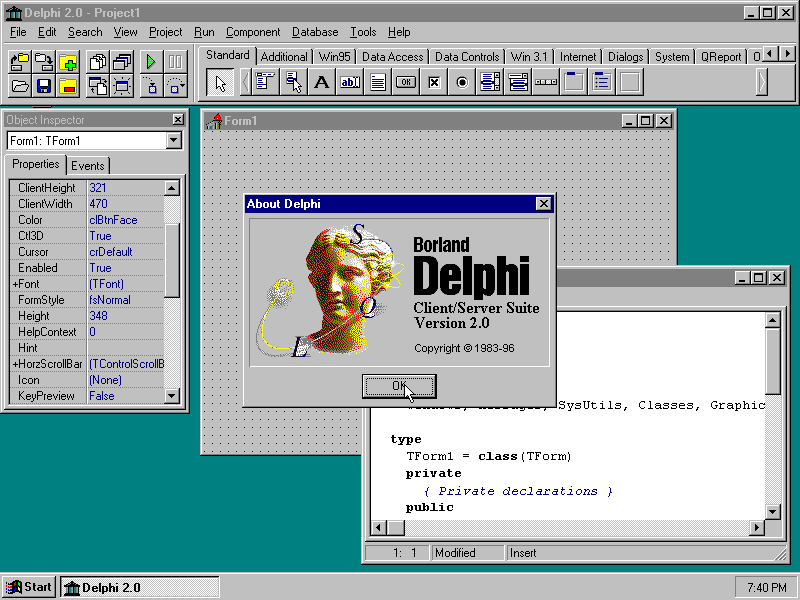

With the rise of graphical environments such as System 1, the X Window System and later Windows 3.1 and especially Windows 95, IDEs and programming languages modernized and improved the developer experience. Two clear examples were Delphi (the inspiration for my first book) and Visual Basic, which made it easy to build desktop applications: you drew each screen by clicking and dragging the desired objects onto a mock-up of the final window and then attached code to each object. These IDEs also bundled all the debugging and testing tools, each a single click away and all very visual.

At that time, development followed the waterfall model, a sequential and linear approach in which each stage was completed before moving on to the next. This required exhaustive planning and resulted in long, rigid, lopsided (and sometimes garbage) projects.

But something was changing in this respect. In the mid-1990s, terms like Extreme Programming appeared, paving the way for agile methodologies.

The Modern Age: The Web and Enterprise Applications Era

The popularization of the Internet shifted the focus of application development from the desktop to the web, and the developer experience shifted with it. In this era, companies did not want to miss the Internet train and launched portals for their customers and employees, pushing developers towards building web and enterprise applications. Languages such as Java and PHP became widely used, and new development methodologies focused on collaboration and continuous delivery emerged.

This is when frameworks such as Ruby on Rails, Django and ASP.NET appeared. By providing predefined structures and libraries, they allowed developers to build web applications faster and more efficiently, without having to write all the HTML of every page by hand.

As companies’ demands grew and changed more often, solutions were expected to be delivered at an ever faster pace. Agile methodologies such as Scrum or Kanban responded by emphasizing collaboration, adaptability and continuous delivery. This way of working allows greater flexibility and a better response to changes in project requirements than the rigidity of waterfall techniques.

The Contemporary Age: The Cloud Era and the Rise of DevOps

With the capability and flexibility offered by cloud computing, the developer experience is focused on creating scalable and highly available applications. Developers also take on broader roles in the development lifecycle, collaborating closely with operations (DevOps) and security (DevSecOps) teams.

Platforms such as Amazon Web Services (AWS), Google Cloud Platform (GCP) or Microsoft Azure provide scalable Infrastructure as a Service (IaaS) and Platform as a Service (PaaS) offerings, allowing teams to focus on development without worrying about managing infrastructure or services such as replication or load balancing.

It is common practice to use CI/CD (continuous integration and continuous delivery) to automate the building, testing and deployment of applications on an ongoing basis. Tools such as Jenkins or GitLab, among many others, facilitate these automated integration and delivery tasks.

The Future Age: The Era of No-Code, Artificial Intelligence and Platforms

In DevOps development teams, especially those building cloud applications, the cognitive load on people is increasing. There are more and more technologies and tools that developers need to know in order to do their job properly. Knowing how to configure and use each piece of software that makes up a modern development setup is not trivial, so developers end up spending on learning something new part of the time they would otherwise devote to other tasks. For a junior programmer, it can take several months to become fully operational after learning all the utilities and techniques used in development. Couple this with the shortage of available staff and the increase in demand and you have the perfect storm.

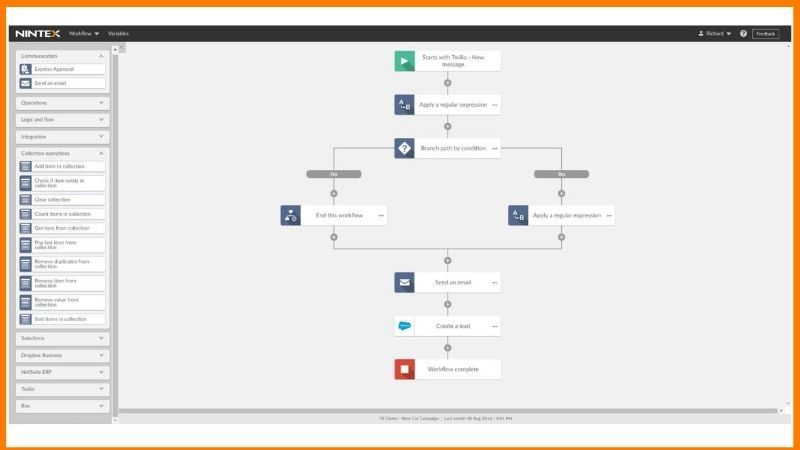

This is where technologies such as low code / no code come in: they offer an even higher level of abstraction that lets you create applications while writing very little code, or none at all.

On the other hand, we are already seeing artificial intelligence burst into the programming world with examples such as GitHub Copilot, Snyk Code or the well-known ChatGPT, not only to write code but also to explain it (very useful in legacy or poorly documented systems), detect security flaws or improve performance.

Among all the types of platforms that exist in the IT world, cloud platforms such as Azure, AWS or GCP, already mentioned above, are especially relevant for programmers. They allow developers to focus much more on development itself and to offload, or even forget about, topics such as infrastructure, backups or load balancing, among other things.

In the corporate world, however, these platforms are often too generic: developers are only interested in a certain part of them, plus the services already developed within the company itself. This is where internal developer platforms (IDPs) come into play. Their purpose is to reduce developers’ cognitive load and improve their experience by offering information, technologies, templates and tools through easy, self-service access. This is a large and interesting topic that is worth discussing in more depth another day.

But the future is the future, and we do not know which tools and methodologies will prevail, although it is true that some are already beginning to emerge.