Artificial Intelligence (AI) has been in the spotlight for the last decade, driving significant changes in most sectors. Within AI, one subfield that has gained particular traction lately is generative AI, to the point where it is sometimes difficult to tell human-generated content from AI-generated content. Not only does this technology have the potential to change the way businesses operate, but it is also influencing adjacent markets, such as semiconductors.

The growing demand for generative AI is having a significant impact on the semiconductor market. AI models require a large amount of processing power for training and operation. This has led to an increase in demand for advanced semiconductor chips.

As a result, shares of semiconductor companies have skyrocketed, as investors anticipate continued growth in demand for these chips. Generative AI, therefore, is not only transforming the business world in sectors where it is used directly, but is also driving changes in related markets.

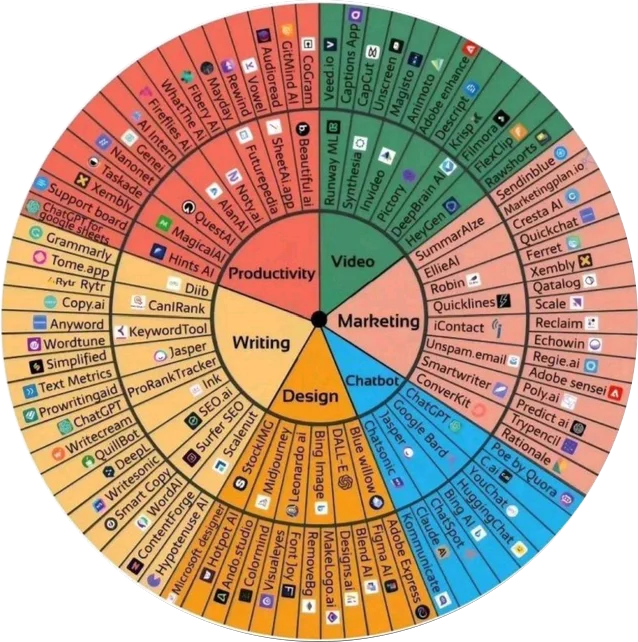

Generative AI refers to systems that can generate something new from input data. These systems can create anything from text, images and music to complex videos. Examples can be found almost everywhere, from programming assistants such as GitHub Copilot or AWS CodeWhisperer to image generation in tools such as Canva or Adobe Firefly.

A prominent example of generative AI is GPT (Generative Pre-trained Transformer), developed by OpenAI. There are currently many tools available for working with this type of generative AI, although perhaps the best known is ChatGPT. We have all been able to play with ChatGPT and see that it is a particularly powerful tool, capable of generating coherent and relevant text from a series of prompts. With this technology it is possible to perform many tasks, such as automating customer service responses, freeing up employees to focus on more complex work. So much so that companies are beginning to hire people whose job is to phrase requests to AI tools precisely enough to get them to do things they were not specifically trained for; this is known as “prompt engineering”.

However, the path is not free of challenges. While these tools can generate impressively coherent responses or near-realistic images, they can sometimes produce incorrect or misleading answers. In addition, they can be susceptible to biases in their training data, which can result in skewed responses.

As for whether it can replace us, it is worth looking at the specific tasks involved: sometimes it can replace the worker, sometimes it can assist, and sometimes it simply has no role (no plumber’s job is threatened by ChatGPT or similar tools, at least for now).

How can it help us?

The short answer: in many ways. To see how ChatGPT could help us, let’s imagine that we have to introduce our team to the Business Model Canvas.

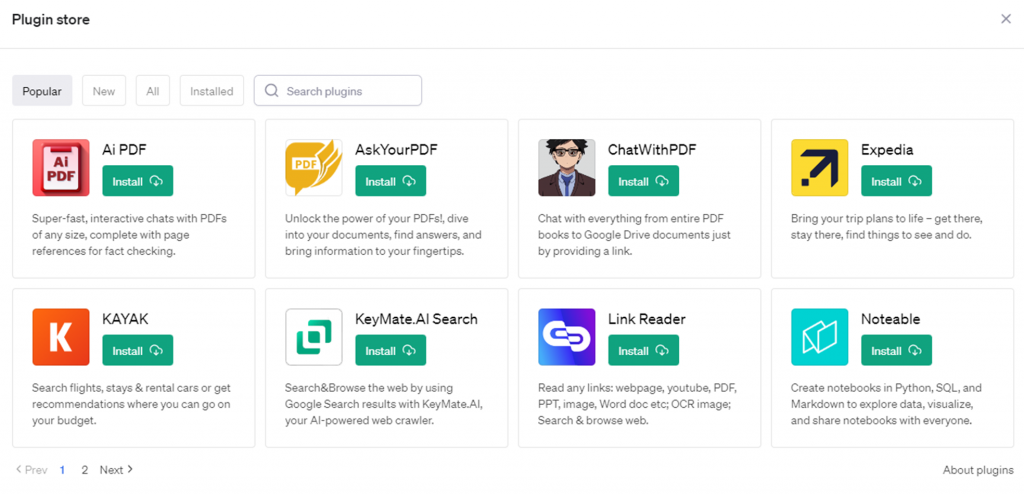

We can ask ChatGPT for help with this. To get more complete results, we will use some of its plugins.

We will activate three of them:

- ChatWithVideo

- Show Me Diagrams

- HeyGen

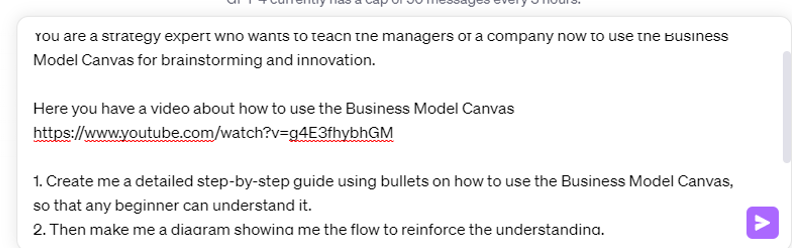

The prompt will be:

Hello,

You are a strategy expert who wants to teach the managers of a company how to use the Business Model Canvas for ideation and innovation.

Here you have a video about how to use the Business Model Canvas https://www.youtube.com/watch?v=g4E3fhybhGM

- Create me a detailed step-by-step guide using bullets on how to use the Business Model Canvas, so that any beginner can understand it.

- Then make me a diagram showing me the flow to reinforce the understanding.

- Create me a summary of about 150 words on how to use the Business Model Canvas.

- Create me a video about it.

Thank you

Yes, I always say hello and thank you: it’s free, and besides, it’s just in case “Maximum Overdrive” comes true and the machines take revenge 😊.

It will start by analyzing the video we provided and will then detail the steps needed to fill in the Business Model Canvas.

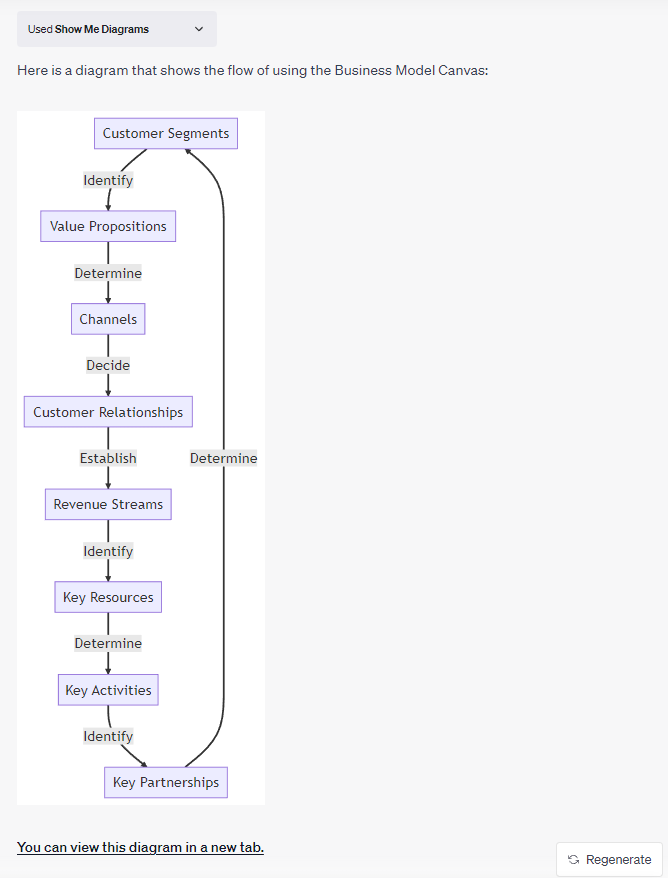

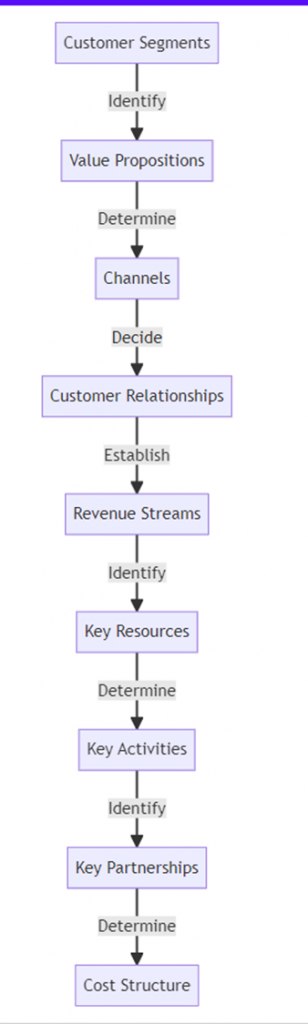

Next, it will draw a diagram of the process.

Finally, it will offer a summary and the generated explanatory video.

Not bad, is it? But it is not perfect; there are some flaws. A particularly interesting one is that the step-by-step description has 9 steps while the diagram has only 8. This is because, when the diagram is generated, the ninth step has the same initials as step 1 (“Customer Segments” and “Cost Structure” both become “CS”), so the two are merged into a single node and the graph loops back on itself instead of ending. The good news is that the ChatGPT plugins also let us adjust the output, so the diagram can be corrected.
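To make the initials problem concrete, here is a minimal Python sketch. We do not know how the Show Me Diagrams plugin actually builds its diagrams; the sketch simply assumes that node IDs are derived from the initials of each step name and that the steps follow the conventional Business Model Canvas order. The function names (`initials_id`, `indexed_id`, `build_flow`, `to_mermaid`) are hypothetical, and Mermaid flowchart text is used only as a convenient plain-text diagram format.

```python
# Hypothetical sketch: the plugin's real internals are unknown. We only assume
# that node IDs come from the initials of each step name.

STEPS = [  # conventional Business Model Canvas order (assumed)
    "Customer Segments",
    "Value Propositions",
    "Channels",
    "Customer Relationships",
    "Revenue Streams",
    "Key Resources",
    "Key Activities",
    "Key Partnerships",
    "Cost Structure",
]


def initials_id(index: int, name: str) -> str:
    """Naive ID: first letter of each word ("Cost Structure" -> "CS")."""
    return "".join(word[0] for word in name.split())


def indexed_id(index: int, name: str) -> str:
    """Collision-free ID based on the step's position ("S1", "S2", ...)."""
    return f"S{index + 1}"


def build_flow(steps, make_id):
    """Return (node_ids, edges) for a linear step-by-step flow."""
    ids = [make_id(i, name) for i, name in enumerate(steps)]
    edges = list(zip(ids, ids[1:]))
    return ids, edges


def to_mermaid(steps, make_id) -> str:
    """Render the flow as Mermaid flowchart text."""
    ids, edges = build_flow(steps, make_id)
    lines = ["flowchart TD"]
    lines += [f'    {node}["{name}"]' for node, name in zip(ids, steps)]
    lines += [f"    {a} --> {b}" for a, b in edges]
    return "\n".join(lines)


if __name__ == "__main__":
    for make_id in (initials_id, indexed_id):
        ids, edges = build_flow(STEPS, make_id)
        # In a linear chain, a repeated ID means the last edge points back
        # to an earlier node, so the "flow" turns into a loop.
        print(make_id.__name__, "steps:", len(ids), "nodes:", len(set(ids)))
    print(to_mermaid(STEPS, indexed_id))
```

Run as a script, it reports 9 steps but only 8 distinct nodes for the initials-based IDs, and 9 of each for the position-based IDs. Giving each step an ID that cannot collide (here, its position) is the simplest fix, while the readable step name stays in the node label.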

In a few minutes we had a step-by-step guide, a diagram and a video that could help us speed up our work. But we also saw that the output was not quite right and required human intervention. The level of detail in the first answer may also fall short of what we expected; to get more, we can ask follow-up questions on that specific point. In short, it can help us speed up many of our tasks, but we must always be alert to possible failures, biases or inconsistencies.

Risks

Although we feel very comfortable talking to the chatbot, we must keep in mind that every word we write is being handed over to a private company, and few such companies clearly explain how they are going to use that data.

Taking ChatGPT as an example (others, such as Bard, are in the same situation), its terms of use state that parts of conversations may be retained to improve its answers, and those conversations may even be reviewed by humans.

Generally speaking, we should not share any data that could compromise our own security, that of our people or that of the company we work for. Many companies have a department responsible for defining when AI may be used at work, what information may be shared, and where to direct related questions (and companies that do not have one should create one, given the risks of not having one).

Some of the clearest examples of information we should not share are:

- Passwords. These chatbots store conversations on the provider’s servers, which are not designed to protect secrets the way a password manager is, so we would be exposing our passwords to anyone who gains access to that data. If the servers are breached, attackers could obtain the passwords and use them to cause financial and/or personal harm.

- Personal data such as a home address. It is important never to share personal data (also known as PII, Personally Identifiable Information) with AI chatbots. Again, a breach of the servers can cause financial and/or personal havoc. Details such as political or religious affiliation, health issues or family relationships can be maliciously exploited if they fall into the wrong hands.

- Financial details. Even though companies claim to anonymize conversation data, third parties and some employees may still have access to it. If personalized financial advice is needed, there are better options than AI chatbots. These bots may give us inaccurate or misleading information, and we would have no one to complain to and no legal recourse. In these cases it is better to seek advice from a licensed financial advisor who can provide reliable, personalized guidance.

- Intimate thoughts. It is very important to know that chatbots lack real-world knowledge and only know what they were trained on, so they can only provide generic answers (even if those answers seem accurate to us). This means they may recommend medications or treatments that are not suitable for our specific needs and could harm our health. Furthermore, if this information falls into the wrong hands, malicious actors could exploit it by selling it on the dark web or using it for spying or blackmail. Therefore, safeguarding the privacy of personal thoughts when interacting with chatbots is of utmost importance.

- Confidential work information. A fairly recent case involved a company whose employees used ChatGPT to help develop software and, in doing so, entered highly confidential company data, losing control over it and making it accessible to others. It is essential to exercise caution when sharing code, sensitive information or work-related details.

Deus Ex Machina

“Deus ex machina” is a Latin phrase that literally translates as “god from the machine.” It originated in ancient theater, where a crane (the “machine”) was used to lower actors playing gods onto the stage at the climax of a play, usually to resolve the plot in a surprising and miraculous way, sometimes with implausible results. With AI, when a generative model produces results that look surprisingly good but lack a realistic basis or coherent reasoning, they can feel like “magic”, with no real understanding of the logic or rules they should follow. When developing applications with generative AI, it is important to ensure that the generated results are consistent and grounded in real data and knowledge, to avoid this “deus ex machina” effect.

As we have seen, we are entering an exciting new era, not without risks and uncertainties; what is certain is that AI is here to stay and will be increasingly present in our lives.
